Boost Your Spark Efficiency with Eager Evaluation!

spark.sql.repl.eagerEval.enabled

As Spark enthusiasts, we're all familiar with its lazy evaluation model: transformations aren't executed until an action is called. But what if I told you there's a way to see your results without calling an action every time?

spark.sql.repl.eagerEval.enabled is a configuration option that can streamline your Spark development experience! With this setting enabled, you no longer need to call .show() to view your results while developing or testing your code in a notebook; evaluating a DataFrame on its own displays its first rows.

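By default, Spark has this configuration disabled. Keep in mind that once it is enabled, every DataFrame you display triggers a Spark job, so with a large dataset or an expensive chain of transformations it can slow your session down. So, we should use this configuration wisely.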
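One way to keep the cost under control is to cap how much eager evaluation prints. Here is a minimal sketch, assuming an existing SparkSession is passed in; the helper name enable_eager_eval is hypothetical (it is not part of PySpark), while spark.sql.repl.eagerEval.maxNumRows and spark.sql.repl.eagerEval.truncate are companion settings that limit the number of rows and the characters per cell shown.

```python
def enable_eager_eval(spark, max_rows=20, truncate=20):
    """Turn on REPL eager evaluation with limits on what gets displayed.

    `spark` is assumed to be an existing SparkSession. This helper is
    hypothetical and only wraps three spark.conf.set calls.
    """
    # Display a DataFrame's rows when it is evaluated in a REPL or notebook
    spark.conf.set("spark.sql.repl.eagerEval.enabled", "true")
    # Maximum number of rows returned for display
    spark.conf.set("spark.sql.repl.eagerEval.maxNumRows", str(max_rows))
    # Maximum number of characters shown per cell
    spark.conf.set("spark.sql.repl.eagerEval.truncate", str(truncate))
```

For example, enable_eager_eval(spark, max_rows=5) would show at most five rows each time a DataFrame is displayed. The limits only affect what is printed, so an expensive transformation upstream still has to run.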

📝 Example: Check out this PySpark snippet to see spark.sql.repl.eagerEval.enabled in action:

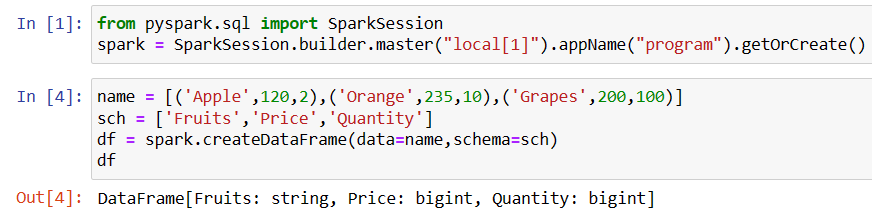

from pyspark.sql import SparkSession

spark = SparkSession.builder.master("local[1]").appName("program").getOrCreate()

name = [('Apple',120,2),('Orange',235,10),('Grapes',200,100)]

sch = ['Fruits','Price','Quantity']

df = spark.createDataFrame(data=name,schema=sch)

df

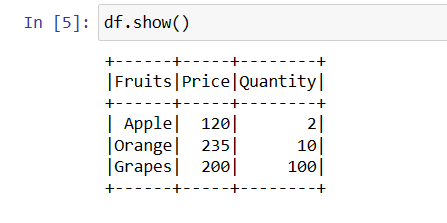

Typing df on its own shows only the DataFrame's schema. Until you call an action, in this case .show(), the result will not be displayed.

df.show()

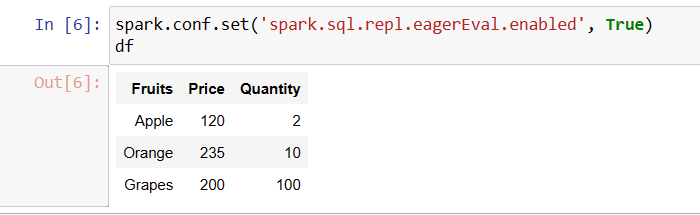

Let us enable this configuration and see the result.

spark.conf.set('spark.sql.repl.eagerEval.enabled', True)

df

Here we did not call an action; we just typed df, and the result is printed.
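Why does typing a bare name print rows at all? A REPL or notebook displays the last expression using the object's representation hook, and that hook can check a flag and run the query. The following is a toy model in plain Python, not Spark's actual implementation; ToyFrame, eager_eval, max_rows, and jobs_run are all made-up names for illustration.

```python
class ToyFrame:
    """Toy stand-in for a DataFrame; NOT Spark's implementation."""

    eager_eval = False  # plays the role of spark.sql.repl.eagerEval.enabled
    max_rows = 20       # plays the role of a row limit for display

    def __init__(self, columns, rows):
        self.columns = columns
        self.rows = rows
        self.jobs_run = 0  # counts how often data was actually fetched

    def _collect(self, n):
        # Stands in for running a job and fetching the first n rows
        self.jobs_run += 1
        return self.rows[:n]

    def __repr__(self):
        # The REPL calls this hook when you type the variable name alone
        if not ToyFrame.eager_eval:
            # Lazy mode: describe the frame without touching the data
            return "ToyFrame[" + ", ".join(self.columns) + "]"
        # Eager mode: fetch rows and render them as a small table
        lines = [" | ".join(self.columns)]
        for row in self._collect(ToyFrame.max_rows):
            lines.append(" | ".join(str(value) for value in row))
        return "\n".join(lines)


df = ToyFrame(
    ["Fruits", "Price", "Quantity"],
    [("Apple", 120, 2), ("Orange", 235, 10), ("Grapes", 200, 100)],
)

lazy_view = repr(df)        # only the column names, no data fetched
ToyFrame.eager_eval = True
eager_view = repr(df)       # header plus the three rows
```

Note that in this model the data is fetched once per display. That is the same trade-off to watch for with the real setting: each time the frame is shown, the work behind it runs again.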

A simple yet very useful configuration.

No action calls are needed — just type df, and voilà, your results unfurl before your eyes.

Unlock the potential of eager evaluation and propel your Spark workflow to new heights!
