Troubleshooting “Column Not Callable” Error in PySpark

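In this post, we look at one specific cause of the “Column not callable” error in PySpark, misspelling the alias method inside the agg function, and how to fix it.

The Misspelling Issue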

One specific cause of this error is misspelling the alias method when using the agg function. For example:

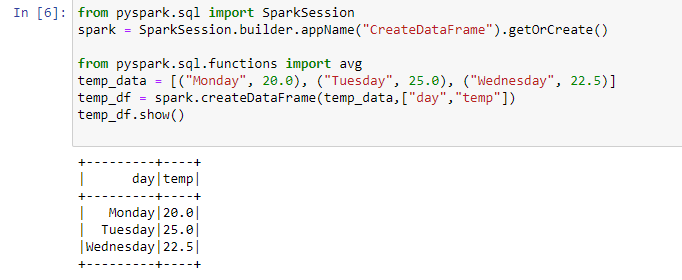

Reading the data

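```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import avg

# Create a SparkSession, or reuse the active one (notebooks usually predefine it as spark)
spark = SparkSession.builder.getOrCreate()

temp_data = [("Monday", 20.0), ("Tuesday", 25.0), ("Wednesday", 22.5)]

# Two columns: "day" (string) and "temp" (double)
temp_df = spark.createDataFrame(temp_data, ["day", "temp"])
```

Output of the above code: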

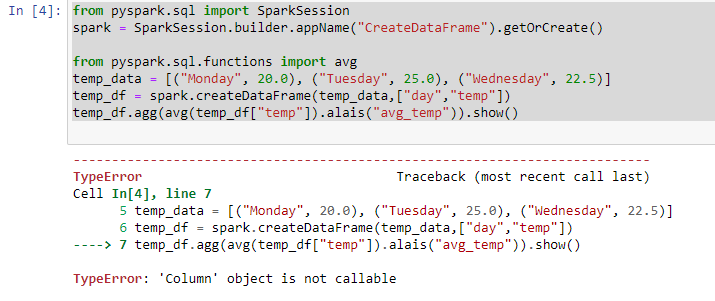

Now let us perform the aggregation:

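```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import avg

spark = SparkSession.builder.getOrCreate()

temp_data = [("Monday", 20.0), ("Tuesday", 25.0), ("Wednesday", 22.5)]
temp_df = spark.createDataFrame(temp_data, ["day", "temp"])

# Intended: average the "temp" column and name the result "avg_temp"
temp_df.agg(avg(temp_df["temp"]).alais("avg_temp")).show()
```

What will the output be?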

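If you look closely, you will notice that ‘alias’ is misspelled as ‘alais’. PySpark does not report this as a missing method: a Column treats an unknown attribute name as a lookup of a nested field with that name, so ‘.alais’ quietly returns another Column, and calling that Column with ("avg_temp") raises TypeError: 'Column' object is not callable. A typo like this can cost hours of debugging if we do not pay attention.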

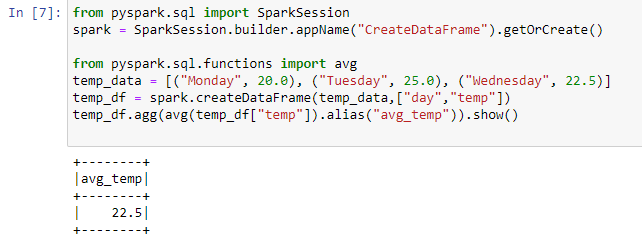
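To see why a misspelled method surfaces as “not callable” instead of a missing-attribute error, here is a minimal pure-Python sketch. ToyColumn is a hypothetical stand-in, not PySpark's source code; it only assumes the behavior described above, namely that unknown attribute names are treated as nested-field access and return a new column object.

```python
class ToyColumn:
    """Hypothetical stand-in for a PySpark Column (illustration only)."""

    def __init__(self, expr: str) -> None:
        self.expr = expr

    def alias(self, name: str) -> "ToyColumn":
        # The real method: give the expression's output column a new name
        return ToyColumn(f"{self.expr} AS {name}")

    def __getattr__(self, item: str) -> "ToyColumn":
        # Python calls this only when normal attribute lookup fails,
        # e.g. for a misspelled method name such as "alais".
        if item.startswith("__"):
            raise AttributeError(item)
        # Assumed behavior: treat the name as a nested field and return a new column
        return ToyColumn(f"{self.expr}.{item}")


col = ToyColumn("avg(temp)")
print(col.alias("avg_temp").expr)  # avg(temp) AS avg_temp

field = col.alais                  # no AttributeError, just another ToyColumn
print(type(field).__name__)        # ToyColumn

try:
    col.alais("avg_temp")          # calling a column object, not a method
except TypeError as exc:
    print(exc)                     # 'ToyColumn' object is not callable
```

The typo is only detected at the moment the returned object is called, which is why the error message mentions a Column being called rather than a misspelled name.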

One simple change makes the code work: correct the spelling of alias and rerun the program.

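```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import avg

spark = SparkSession.builder.getOrCreate()

temp_data = [("Monday", 20.0), ("Tuesday", 25.0), ("Wednesday", 22.5)]
temp_df = spark.createDataFrame(temp_data, ["day", "temp"])

# "alias" is now spelled correctly, so the result column is named avg_temp
temp_df.agg(avg(temp_df["temp"]).alias("avg_temp")).show()
```

Now the code runs and shows the expected result: a single avg_temp column containing 22.5, the mean of 20.0, 25.0 and 22.5.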
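As a quick cross-check of the value the corrected aggregation should report, the mean can be computed in plain Python without Spark. This sketch simply reuses the three temperatures from temp_data and assumes avg behaves as an ordinary arithmetic mean (sum divided by count) for these non-null values.

```python
# The same three readings as the "temp" column in temp_data
temps = [20.0, 25.0, 22.5]

# Arithmetic mean: (20.0 + 25.0 + 22.5) / 3 = 67.5 / 3
avg_temp = sum(temps) / len(temps)
print(avg_temp)  # 22.5
```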

Conclusion

In this blog post, we learned about one specific cause of the “Column not callable” error in PySpark: misspelling the alias method in the agg function. The error occurs when code calls a Column object as if it were a function, and a misspelled method name such as alais leads straight to that situation. To fix it, correct the spelling to alias. We also saw how to calculate the average value of a numeric column in PySpark without encountering this error.
