Difference between distinct() and dropDuplicates()


distinct()

It checks the entire row: if two or more rows have the same values in every column, it keeps only one of them. In other words, it returns the distinct rows of the DataFrame based on the values of all its columns.
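To make the rule concrete, here is a minimal pure-Python model of what distinct() computes, assuming rows are plain tuples. It only illustrates the semantics and is not how Spark executes the operation. The helper name `distinct_rows` is hypothetical.

```python
def distinct_rows(rows):
    """Hypothetical helper: keep one copy of each fully identical row.

    Rows are compared on all of their values, like DataFrame.distinct().
    Insertion order is kept here only for readability; Spark itself
    does not guarantee any output order.
    """
    # dict.fromkeys keeps the first occurrence of each key and preserves order
    return list(dict.fromkeys(rows))


rows = [
    (1, "Product A", 10),
    (1, "Product A", 10),  # exact duplicate of the row above
    (1, "Product E", 20),  # same product_id, different other values
]
print(distinct_rows(rows))
# [(1, 'Product A', 10), (1, 'Product E', 20)]
```

Because the dropped rows are identical to the kept one, it does not matter which copy survives.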

dropDuplicates(subset=None)

It is more versatile than distinct() because it can also remove duplicates based on specific columns only. When no argument is passed, dropDuplicates() performs the same task as distinct() and compares entire rows. However, you can also pass a subset of column names to consider when looking for duplicates: rows that match on those columns are treated as duplicates, and one complete row is kept for each combination of values.
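The optional subset can be modelled in plain Python as follows. This is a sketch under the assumption that rows are tuples and column names are given as a separate list; `drop_duplicates` is a hypothetical helper, not part of PySpark. It keeps the first row seen for each key, whereas Spark does not promise which of the duplicate rows it keeps.

```python
def drop_duplicates(rows, columns, subset=None):
    """Hypothetical model of DataFrame.dropDuplicates(subset).

    rows:    list of tuples
    columns: column names, in the same order as the tuple fields
    subset:  column names to compare; None means compare every column
    """
    if subset is None:
        subset = columns
    positions = [columns.index(name) for name in subset]
    seen = set()
    result = []
    for row in rows:
        key = tuple(row[i] for i in positions)  # only the subset columns form the key
        if key not in seen:
            seen.add(key)
            result.append(row)  # the whole row is returned, not just the key
    return result


columns = ["product_id", "product_name", "quantity_sold"]
rows = [
    (1, "Product A", 10),
    (1, "Product A", 10),
    (1, "Product E", 20),
]
print(drop_duplicates(rows, columns))                  # behaves like distinct()
print(drop_duplicates(rows, columns, ["product_id"]))  # one row per product_id
```

With no subset the key is the whole row, which is why dropDuplicates() without arguments matches distinct().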

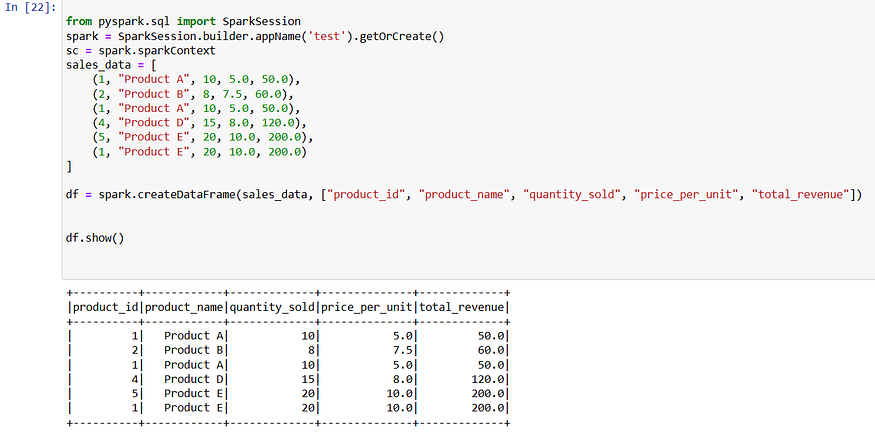

Example code:

distinct():

```python
from pyspark.sql import SparkSession

# Start (or reuse) a Spark session
spark = SparkSession.builder.appName('test').getOrCreate()

# Sample sales records; the first and third rows are exact duplicates
sales_data = [
    (1, "Product A", 10, 5.0, 50.0),
    (2, "Product B", 8, 7.5, 60.0),
    (1, "Product A", 10, 5.0, 50.0),
    (4, "Product D", 15, 8.0, 120.0),
    (5, "Product E", 20, 10.0, 200.0),
    (1, "Product E", 20, 10.0, 200.0),
]

df = spark.createDataFrame(
    sales_data,
    ["product_id", "product_name", "quantity_sold", "price_per_unit", "total_revenue"],
)

df.show()
```

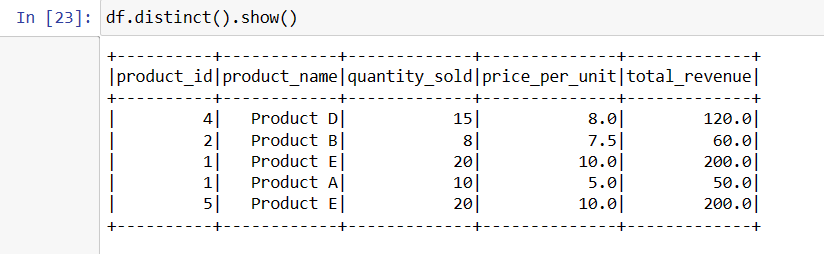

```python
df.distinct().show()
```

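Only the exact duplicate of the first row is removed, so five of the six rows remain. Note that product_id 1 still appears twice: the last record with product_id 1 has different values in the other columns, so distinct() treats it as a separate row.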
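The same result can be checked without a cluster by applying full-row deduplication to the article's sales_data in plain Python. This is only a sanity check of the semantics, assuming the rows are kept as tuples; it does not call Spark.

```python
from collections import Counter

# The same sales_data used in the PySpark example above, as plain tuples:
# (product_id, product_name, quantity_sold, price_per_unit, total_revenue)
sales_data = [
    (1, "Product A", 10, 5.0, 50.0),
    (2, "Product B", 8, 7.5, 60.0),
    (1, "Product A", 10, 5.0, 50.0),
    (4, "Product D", 15, 8.0, 120.0),
    (5, "Product E", 20, 10.0, 200.0),
    (1, "Product E", 20, 10.0, 200.0),
]

# Full-row deduplication, mirroring which rows df.distinct() keeps
distinct_rows = list(dict.fromkeys(sales_data))

print(len(sales_data), "->", len(distinct_rows))  # 6 -> 5
per_id = Counter(row[0] for row in distinct_rows)
print(per_id[1])  # 2: product_id 1 survives twice because the rows differ
```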

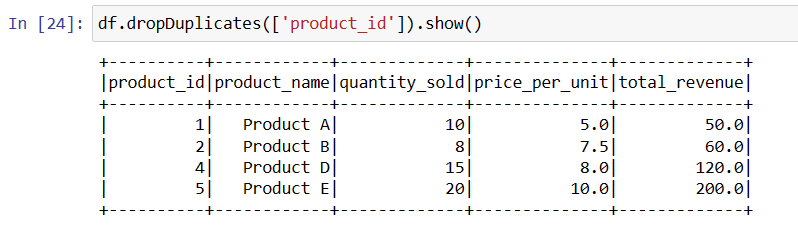

dropDuplicates()

```python
df.dropDuplicates().show()
```

Called without arguments, this performs the same task as distinct(). The difference is that dropDuplicates() can also take a subset of columns.

dropDuplicates(subset)

```python
df.dropDuplicates(['product_id']).show()
```

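If you run this query, only one record with product_id 1 is kept and the others are dropped, even though they differ in the remaining columns. This is because Spark checks for duplicates only in the product_id column. Spark does not guarantee which of the duplicate rows is kept, so do not rely on it always being the first one. You can also pass more than one column in the subset; rows then count as duplicates only when all of the listed columns match.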
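If you need a specific row to survive (for example, the one with the highest total_revenue), the rule has to be stated explicitly. In PySpark this is commonly done with a window partitioned by the key and ordered by the chosen column, keeping rows where `row_number()` equals 1. The pure-Python sketch below models that rule next to a first-seen deduplication and a two-column subset; `drop_duplicates` and `keep_max_per_key` are hypothetical helpers, and ties simply keep the first row seen.

```python
# Same sales_data as the article, as plain tuples:
# (product_id, product_name, quantity_sold, price_per_unit, total_revenue)
sales_data = [
    (1, "Product A", 10, 5.0, 50.0),
    (2, "Product B", 8, 7.5, 60.0),
    (1, "Product A", 10, 5.0, 50.0),
    (4, "Product D", 15, 8.0, 120.0),
    (5, "Product E", 20, 10.0, 200.0),
    (1, "Product E", 20, 10.0, 200.0),
]
COLUMNS = ["product_id", "product_name", "quantity_sold", "price_per_unit", "total_revenue"]


def drop_duplicates(rows, subset):
    """Hypothetical model: keep the first row seen for each subset key."""
    positions = [COLUMNS.index(name) for name in subset]
    kept = {}
    for row in rows:
        kept.setdefault(tuple(row[i] for i in positions), row)
    return list(kept.values())


def keep_max_per_key(rows, key, order_by):
    """Hypothetical helper: keep the row with the largest order_by value per key.

    Models partitionBy(key).orderBy(desc(order_by)) with row_number() == 1,
    so apart from ties the surviving row does not depend on input order.
    """
    k, o = COLUMNS.index(key), COLUMNS.index(order_by)
    best = {}
    for row in rows:
        if row[k] not in best or row[o] > best[row[k]][o]:
            best[row[k]] = row
    return list(best.values())


# Two-column subset: rows match only if product_name AND quantity_sold match
print(len(drop_duplicates(sales_data, ["product_name", "quantity_sold"])))  # 4

# First-seen vs. explicit rule for product_id 1
print(drop_duplicates(sales_data, ["product_id"])[0])                   # (1, 'Product A', 10, 5.0, 50.0)
print(keep_max_per_key(sales_data, "product_id", "total_revenue")[0])  # (1, 'Product E', 20, 10.0, 200.0)
```

The two product_id 1 results differ, which is exactly why an explicit ordering rule is safer than relying on whichever row dropDuplicates() happens to keep.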

Conclusion

In summary, distinct() and dropDuplicates() are both useful methods for handling duplicate data in PySpark DataFrames. distinct() checks for duplicates in all columns, while dropDuplicates() allows you to specify a subset of columns to consider. Understanding the differences between these methods can help you choose the right one for your data processing needs.
